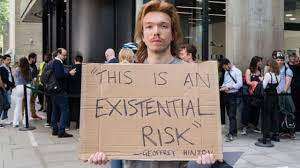

Experts warn artificial intelligence could lead to human extinction

Artificial intelligence could lead to the extinction of the human race, experts including the heads of OpenAI and Google DeepMind have warned. Dozens of people have backed a statement posted on the Center for AI Safety’s website.

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” it reads. But others said the fears were overblown.

Sam Altman, CEO of ChatGPT maker OpenAI, Demis Hassabis, CEO of Google DeepMind, and Dario Amodei of Anthropic have all supported the statement. Dr Geoffrey Hinton, who previously warned about the risks of super-intelligent AI, has also supported the call.

Yoshua Bengio, a professor of computer science at the University of Montreal, also signed.

Dr Hinton, Professor Bengio and Professor Yann LeCun of NYU are often described as the “godfathers of AI” for their groundbreaking work in the field. For that work they jointly won the 2018 Turing Award, which recognizes outstanding contributions to computer science.

But Professor LeCun, who also works at Meta, said these apocalyptic warnings were overblown.

Many other experts agree that fears of AI wiping out humanity are unrealistic and distract from issues, such as bias, in systems that are already a problem.

Arvind Narayanan, a computer scientist at Princeton University, previously told the BBC that science fiction-like disaster scenarios are unrealistic: “Current AI is nowhere near capable enough for these risks to materialise. As a result, it’s distracted attention away from the near-term harms of AI.”

Elizabeth Renieris, senior research associate at Oxford’s Institute for Ethics in AI, said she is more worried about risks closer to the present. “Advances in AI will amplify the extent to which automated decision-making is biased, discriminatory, monopolistic, or unfair while being impenetrable and indisputable,” she said.

They will “cause an exponential increase in the volume and spread of misinformation, thereby distorting reality and eroding public trust, while deepening inequality, especially for those who are still on the wrong side of the digital divide.” Ms Renieris said many AI tools essentially “free ride” on “the whole of human experience to date”.

Many are trained on human-made content, including writing, art and music, that they can then imitate, and their creators have “effectively transferred enormous wealth and power from the public sphere to a handful of private entities”.

OpenAI CEO Sam Altman testifies before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law at an oversight hearing to examine rules governing artificial intelligence (AI), in the Dirksen Senate Office Building in Washington, DC, USA, May 16, 2023

Media coverage of the supposed “existential” threat from AI has been running high since March 2023, when experts including Tesla boss Elon Musk signed an open letter calling for a halt to the development of the next generation of AI technology.

That letter asked whether we should “develop non-human minds that might eventually outnumber, outsmart, obsolete and replace us”.

In contrast, the new campaign has a very short statement, designed to “open the door to discussion”. The statement compares the risk to that posed by nuclear war. In a blog post, OpenAI recently suggested that superintelligence could be regulated in a similar way to nuclear energy:

“Perhaps eventually we’ll need something like the International Atomic Energy Agency (IAEA) for superintelligence efforts,” the firm wrote.

“Be reassured”

Sam Altman and Google CEO Sundar Pichai are among the tech leaders who recently discussed AI regulation with the Prime Minister. Speaking to the press about the latest warning on AI risk, Rishi Sunak highlighted the benefits of AI to the economy and society.

“You’ve seen recently that it’s helping paralyzed people walk, discovering new antibiotics, but we need to make sure it’s done in a safe and secure way,” he said. “That’s why I met with CEOs of big AI companies last week to discuss what protections we need to put in place, what kind of regulations we need to put in place to keep people safe.

“People will be concerned about reports that AI poses existential risks, like pandemics or nuclear war.

“I want them to be reassured that the government is looking at this very carefully.”

Sunak said he recently discussed the issue with other leaders at the G7 summit of major industrialized nations and will raise the issue again in the United States soon.

The G7 recently created a working group on AI.